Tenant Data Isolation: Patterns and Anti-Patterns

Explore effective patterns and pitfalls of tenant data isolation in multi-tenant systems to enhance security and compliance.

Jul 30, 2025

Read More

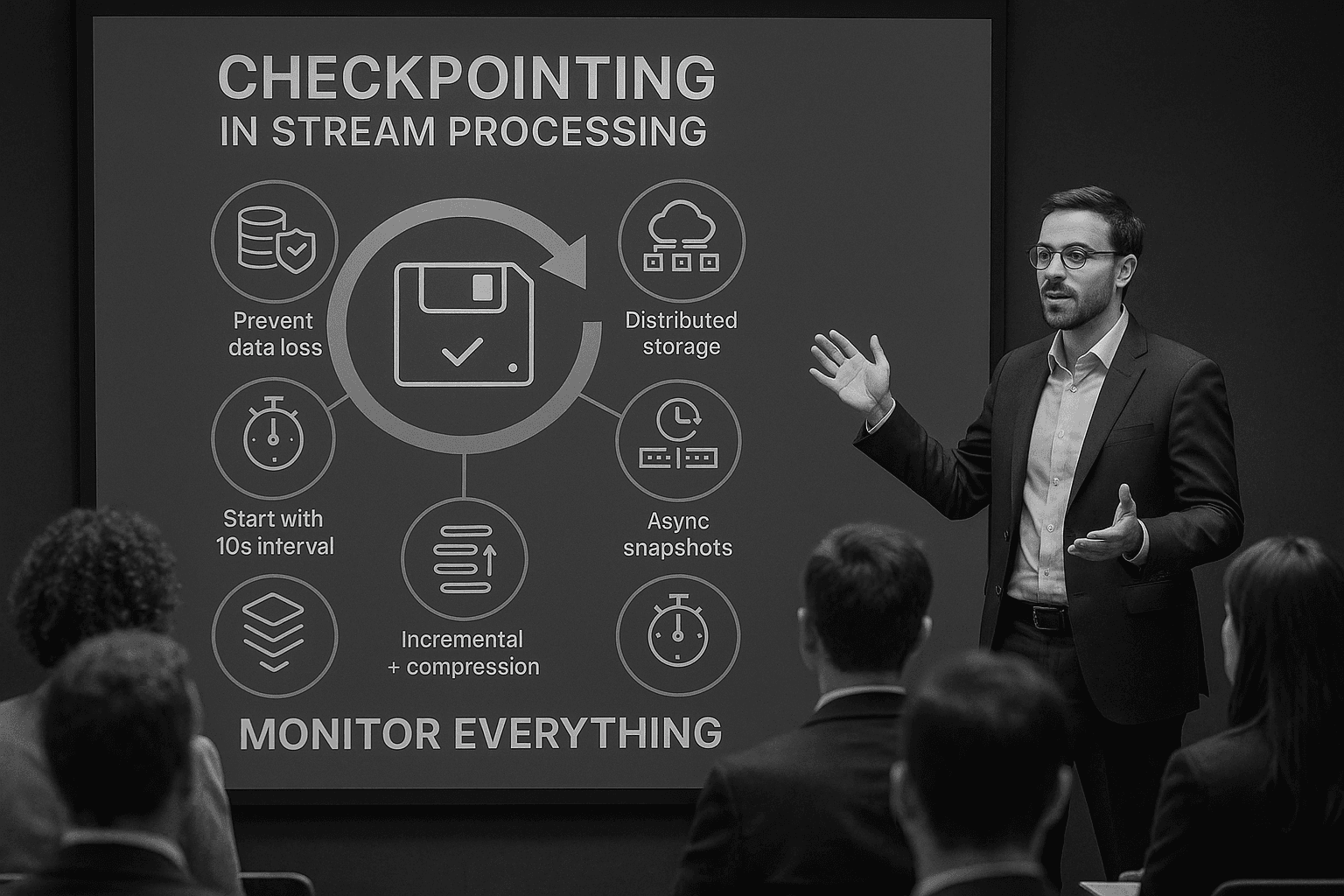

Checkpointing lets your stream processing system recover cleanly after failures, without losing data or processing records twice.

Pro tip: Asynchronous snapshots and compression can drastically improve efficiency for high-volume systems.

Keep reading to learn how to configure and optimize checkpointing for your specific needs.

To ensure applications can handle failures gracefully and maintain data integrity, checkpointing is built on three key principles: fault tolerance, consistency, and state management. These principles form the backbone of reliable and efficient systems.

Fault tolerance relies on creating periodic snapshots of a system's state, offering a recovery point in case of unexpected failures. By saving the positions of processed data and intermediate results at regular intervals, distributed systems can coordinate snapshots that minimize data loss and prevent duplicate processing.

For example, imagine a fraud detection system analyzing financial transactions. With proper checkpointing, the system can recover accurately, retaining counts of suspicious activities without missing or duplicating transactions after a failure.
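Here is a minimal single-process sketch of that recovery. It assumes the transactions come from a replayable source, with an in-memory list standing in for something like a Kafka topic. The checkpoint stores the read offset together with the per-account counts. After a simulated crash, the operator restores both and replays from the saved offset. FraudCounter, Checkpoint, and run_with_failure are hypothetical names, and the threshold rule is only illustrative.

```python
import copy
from dataclasses import dataclass


@dataclass
class Checkpoint:
    offset: int   # position of the next record to read from the replayable source
    counts: dict  # per-account count of suspicious transactions at that position


class FraudCounter:
    """Hypothetical stateful operator: counts transactions above a threshold per account."""

    def __init__(self, threshold: float):
        self.threshold = threshold
        self.offset = 0
        self.counts: dict = {}

    def process(self, record: tuple) -> None:
        account, amount = record
        if amount > self.threshold:
            self.counts[account] = self.counts.get(account, 0) + 1
        self.offset += 1

    def checkpoint(self) -> Checkpoint:
        # The source position and the state are saved together, as one unit.
        return Checkpoint(self.offset, copy.deepcopy(self.counts))

    def restore(self, cp: Checkpoint) -> None:
        self.offset = cp.offset
        self.counts = copy.deepcopy(cp.counts)


def run_with_failure(log, threshold, checkpoint_every, fail_at):
    """Process `log`, simulating one crash when the operator reaches offset `fail_at`."""
    op = FraudCounter(threshold)
    last_cp = op.checkpoint()
    crashed = False
    while op.offset < len(log):
        if not crashed and op.offset == fail_at:
            crashed = True
            op = FraudCounter(threshold)  # in-memory state is lost in the crash
            op.restore(last_cp)           # rewind state and offset to the last snapshot
            continue
        op.process(log[op.offset])
        if op.offset % checkpoint_every == 0:
            last_cp = op.checkpoint()
    return op.counts
```

Because the offset and the counts are restored as one unit, a crash at any point yields the same counts as a failure-free run. Records after the checkpoint are replayed, but their earlier effects were lost along with the in-memory state, so nothing is missed or double-counted.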

The process involves coordination across multiple nodes to capture a consistent system-wide snapshot, rather than isolated snapshots of individual components. While frequent checkpoints help reduce potential data loss, they also introduce overhead, consuming resources and increasing latency.

Consistency guarantees further bolster these recovery strategies, as explained next.

Consistency in checkpointing ensures your system behaves as though failures never occurred. This is especially critical for applications where accuracy cannot be compromised.

Stream processing frameworks like Apache Flink achieve this through coordination mechanisms. They inject stream barriers into the data streams. These barriers flow with the records and split them into the set that belongs to the current snapshot and the set that belongs to the next one. Each barrier therefore marks the point in the stream at which a snapshot is taken.

Operators use these barriers to align their inputs. Once a barrier arrives on one input, further records on that input are held back until the same barrier has arrived on all inputs, and only then is the operator's state snapshotted. This coordination enables exactly-once processing semantics: even if records are replayed after a failure, each record's effect on state is counted exactly once.
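To make alignment concrete, here is a simplified sketch of one operator summing two input channels. It assumes a single checkpoint barrier (BARRIER) and a hand-written arrival order. It is not Flink's implementation and only shows the alignment rule: once a channel's barrier arrives, that channel's later records are buffered until every channel has delivered the barrier, and the snapshot is taken at that point.

```python
from collections import deque

BARRIER = object()  # stand-in for the barrier of a single checkpoint


def aligned_sum(channels, schedule):
    """
    Sum records from several input channels while aligning on one barrier.

    channels: list of lists; each item is an int record or BARRIER
    schedule: channel indices giving the order in which items arrive
    Returns (snapshot, final_state).
    """
    queues = [deque(c) for c in channels]
    blocked = set()   # channels whose barrier has already arrived
    buffered = []     # records held back because they belong to the next checkpoint
    state = 0
    snapshot = None
    for ch in schedule:
        if not queues[ch]:
            continue
        item = queues[ch].popleft()
        if item is BARRIER:
            blocked.add(ch)
            if len(blocked) == len(channels):
                snapshot = state      # every input is aligned: snapshot now
                for rec in buffered:  # then release the held-back records
                    state += rec
                buffered.clear()
                blocked.clear()
        elif ch in blocked:
            buffered.append(item)
        else:
            state += item
    return snapshot, state
```

For example, with channels [[1, 2, BARRIER, 100], [10, BARRIER, 1000]], aligned_sum returns (13, 1113). The result is the same whether channel 0 delivers everything first (schedule [0, 0, 0, 0, 1, 1, 1]) or the channels interleave (schedule [1, 0, 1, 0, 0, 0, 1]). Without holding back the record 100 in the first order, the snapshot would have been 113, which would mix in a record that belongs to the next checkpoint.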

For production environments, storing checkpoints in a highly available filesystem is essential to maintain these consistency guarantees. Modern systems also support unaligned checkpointing, which captures in-flight data as part of the operator state. Because barriers can overtake buffered records, checkpoint duration no longer depends on backpressure. The trade-off is larger checkpoints and more I/O.

Checkpointing's effectiveness hinges on robust state management. In stateful stream processing, systems maintain information about past events to provide context for processing current data, enabling decisions based on historical insights.

State management and checkpointing are deeply interconnected. Checkpointing records operator state along with the position of the processed input, forming the foundation for recovery. However, larger states can slow down checkpointing, increase memory usage, and degrade performance.

To address these challenges, efficient state management employs strategies like minimizing state size by retaining only essential information, using Time-to-Live (TTL) to clean up outdated entries, and leveraging state backends that support incremental checkpoints. These approaches ensure systems can manage large data volumes without compromising performance.
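Here is a minimal sketch of TTL-based cleanup. It assumes a simple dictionary-backed keyed state and an injectable clock so that expiry can be tested. TtlState is a hypothetical class; frameworks provide this natively (Flink, for example, through StateTtlConfig). Expired entries are dropped lazily on read and in bulk by cleanup(), which keeps them out of the next snapshot.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TtlState:
    """Hypothetical keyed state whose entries expire ttl_seconds after their last write."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._data: Dict[Any, Tuple[Any, float]] = {}  # key -> (value, last_write_time)

    def put(self, key, value) -> None:
        self._data[key] = (value, self.clock())

    def get(self, key) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, written = entry
        if self.clock() - written > self.ttl:
            del self._data[key]  # lazily drop an expired entry on read
            return None
        return value

    def cleanup(self) -> int:
        """Remove all expired entries (e.g. before a checkpoint); return how many were removed."""
        now = self.clock()
        expired = [k for k, (_, t) in self._data.items() if now - t > self.ttl]
        for k in expired:
            del self._data[k]
        return len(expired)

    def snapshot(self) -> dict:
        """Values that would go into a checkpoint."""
        return {k: v for k, (v, _) in self._data.items()}
```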

Systems like Apache Samza showcase effective state management by combining high-performance local state operations with state replication across nodes for fault tolerance.

The choice of state backend is also critical. For instance, the RocksDB state backend stores state on disk, enabling it to handle much larger states compared to in-memory options. This decision directly impacts the efficiency of capturing and restoring system state.

Finally, to ensure reliable recovery, stateful operators must remain compatible between restarts. In Flink, for example, saved state is matched to operators by their IDs, so those IDs should stay stable. This highlights the close relationship between state management and checkpointing in maintaining both reliability and data consistency.

Checkpointing plays a crucial role in stream processing by ensuring fault tolerance, consistent state management, and quick recovery. To make it effective, careful configuration and adherence to best practices are essential.

Checkpoint intervals determine how often the system captures its state. Striking the right balance is key - too frequent checkpoints can bog down performance with unnecessary overhead, while infrequent checkpoints risk prolonged recovery times in the event of a failure.

For critical workloads, starting with a 10-second interval is a good baseline. Less critical tasks can often handle intervals as long as 15 minutes. When deciding on the interval, consider factors like processing latency, resource usage, and how critical the application is to your operations.
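The trade-off can be put into rough numbers. The sketch below uses an assumed first-order model, not a framework formula. Each checkpoint is taken to cost checkpoint_cost_s seconds of processing time, and a failure just before a checkpoint completes forces a replay of everything since the previous one. The function name and its inputs are hypothetical.

```python
def interval_tradeoffs(interval_s: float, checkpoint_cost_s: float, events_per_s: float):
    """
    Rough estimates for a checkpoint interval under an assumed model:
      overhead_fraction: share of time spent on checkpoint work
      worst_case_replay: events to reprocess if a failure hits just before
                         the next checkpoint completes
    """
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    overhead_fraction = checkpoint_cost_s / interval_s
    worst_case_replay = (interval_s + checkpoint_cost_s) * events_per_s
    return overhead_fraction, worst_case_replay
```

Take a 0.5-second checkpoint cost and 2,000 events per second. A 10-second interval spends about 5% of the time on checkpoints and may replay up to 21,000 events. A 15-minute interval cuts the overhead to about 0.06% but may replay roughly 1.8 million events.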

The storage backend you select greatly influences both the performance of your checkpoints and the reliability of your system. Distributed storage solutions, such as HDFS, Amazon S3, or Google Cloud Storage, are generally the best options. They offer a balance of cost, latency, and reliability, which is crucial for robust checkpointing.

Avoid using local file systems in production environments, as they introduce a single point of failure that can jeopardize recovery. To ensure your chosen backend is performing as expected, use monitoring tools like Prometheus and Grafana to track checkpoint performance regularly.

The configuration of your state backend directly affects how well your system can handle large-scale stateful processing. For example:

- HashMapStateBackend provides fast state access but is limited by memory capacity.
- RocksDBStateBackend can manage larger states but comes with the trade-off of higher serialization and disk I/O costs.

For applications with significant state requirements, enabling incremental checkpointing in RocksDB can drastically cut down on checkpoint times and storage needs. Here are some key configuration tips:

- Set the RocksDB write buffer size explicitly, e.g. setWriteBufferSize(64 * 1024 * 1024) for a 64 MB buffer.
- Enable incremental checkpointing; in recent Flink versions, new EmbeddedRocksDBStateBackend(true) does this.

Consistently monitor metrics like checkpoint duration, state size growth, checkpoint failures, and backpressure. This proactive approach helps you identify and resolve configuration issues, ensuring faster checkpointing and reliable state restoration during failures.
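As an illustration of that kind of monitoring, here is a small alert check over recent checkpoint statistics. In practice the numbers would come from the framework's metrics, for example exported to Prometheus. The CheckpointStat record, the thresholds, and the growth-ratio rule are hypothetical choices for this sketch.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CheckpointStat:
    duration_s: float  # how long the checkpoint took
    state_bytes: int   # size of the checkpointed state
    failed: bool       # whether the checkpoint failed


def checkpoint_alerts(history: List[CheckpointStat], max_duration_s: float,
                      max_growth_ratio: float) -> List[str]:
    """Return human-readable alerts for the most recent checkpoint in `history`."""
    alerts: List[str] = []
    if not history:
        return alerts
    latest = history[-1]
    if latest.failed:
        alerts.append("latest checkpoint failed")
    if latest.duration_s > max_duration_s:
        alerts.append(f"checkpoint took {latest.duration_s:.1f}s (limit {max_duration_s:.1f}s)")
    first = history[0]
    # Flag state that has grown too much relative to the oldest sample in the window.
    if first.state_bytes > 0 and latest.state_bytes / first.state_bytes > max_growth_ratio:
        alerts.append(f"state grew {latest.state_bytes / first.state_bytes:.1f}x")
    return alerts
```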

Avoiding common mistakes in checkpointing can save you from unexpected downtime and data loss. Let’s break down some of the most frequent issues and how to address them effectively.

Getting the timing wrong for checkpoint intervals can seriously drag down system performance and reliability. It’s all about finding the sweet spot - too frequent or too infrequent, and you’re in trouble.

If you set checkpoints too close together, your system spends more time saving its state than actually processing data. Imagine checkpoints happening every few seconds - this adds unnecessary overhead, slowing down throughput and increasing latency in high-demand environments.

On the flip side, if checkpoints are too far apart, recovery takes longer, and you risk losing more data. For instance, if a crash happens and the last checkpoint was 30 minutes ago, reprocessing that gap can be a nightmare, especially in systems requiring exactly-once processing.

For non-critical tasks, start with longer intervals and tweak them based on system performance. A good rule of thumb is to configure a checkpoint timeout - usually around 1 minute - to give each checkpoint enough time to finish without clogging resources. Once you’ve nailed down the interval, don’t forget to shore up your storage configuration.
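A checkpoint timeout bounds how long the system waits for a snapshot before declaring it failed. This sketch runs a snapshot function on a thread pool and gives up after timeout_s seconds. The function name is hypothetical; in Flink the corresponding setting is CheckpointConfig.setCheckpointTimeout. Note that the timed-out work is abandoned here, not forcibly stopped.

```python
import concurrent.futures as cf
import time


def run_checkpoint_with_timeout(snapshot_fn, timeout_s: float, executor: cf.Executor) -> bool:
    """
    Run one checkpoint and wait at most timeout_s for it.
    Returns True if it finished in time; False means the caller should discard it.
    """
    future = executor.submit(snapshot_fn)
    try:
        future.result(timeout=timeout_s)
        return True
    except cf.TimeoutError:
        # cancel() only helps if the task has not started yet; a running Python
        # thread cannot be killed, so a real system would also clean up its output.
        future.cancel()
        return False


if __name__ == "__main__":
    with cf.ThreadPoolExecutor(max_workers=1) as pool:
        print(run_checkpoint_with_timeout(lambda: time.sleep(0.01), 1.0, pool))  # fast snapshot
        print(run_checkpoint_with_timeout(lambda: time.sleep(0.5), 0.05, pool))  # too slow
```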

Even the best checkpointing strategy can crumble under poor storage setup. The scary part? These issues often stay hidden until something breaks.

For example, if storage capacity runs out during runtime, your checkpointing will fail. Everything might seem fine until a spike in processing suddenly overwhelms your storage. Similarly, incorrect access permissions can lead to write errors or even corrupt your checkpoint data.

To avoid these pitfalls, stick with reliable distributed storage solutions like HDFS, Amazon S3, or Google Cloud Storage. Protect sensitive checkpoint data with encryption, and always have a backup plan - literally. Redundant copies stored securely can be lifesavers. Regular monitoring is also key - set up alerts for storage thresholds and write failures to catch problems before they snowball.
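Two of those safeguards can be sketched for a checkpoint directory reached through a filesystem path, for instance a mounted distributed filesystem: a free-space threshold and protection against half-written checkpoints. Object stores such as S3 have their own write semantics, so treat this as an illustration only. write_checkpoint and the 10% threshold are hypothetical. The data goes to a temporary file first and is then renamed into place, so a failed write leaves the previous checkpoint untouched.

```python
import os
import shutil
import tempfile


def write_checkpoint(path: str, data: bytes, min_free_ratio: float = 0.10) -> None:
    """Write checkpoint bytes atomically, refusing if free space would drop below the threshold."""
    directory = os.path.dirname(os.path.abspath(path))
    usage = shutil.disk_usage(directory)
    if usage.free - len(data) < usage.total * min_free_ratio:
        # Hook for alerting: fail loudly before storage actually runs out.
        raise RuntimeError("checkpoint storage below free-space threshold")
    fd, tmp = tempfile.mkstemp(dir=directory, prefix=".ckpt-")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())  # make sure the bytes reach the disk before the rename
        os.replace(tmp, path)     # atomic replacement within the same filesystem
    except BaseException:
        if os.path.exists(tmp):
            os.remove(tmp)        # never leave a partial checkpoint behind
        raise
```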

Beyond timing and storage, maintaining state integrity is crucial. Corrupted state data can derail recovery efforts and lead to inconsistencies, especially in distributed streaming systems.

One common issue is concurrent updates during checkpointing. If snapshots capture state at different times across a distributed setup, you might end up with a recovery state that doesn’t match the full dataset.

To tackle this, configure your system for exactly-once semantics. This ensures every record's effect on state is counted exactly once, keeping your data accurate. Incremental checkpointing, especially with a state backend like RocksDB, can also help by saving only what’s changed since the last checkpoint. For instance, in Flink you can enable this as follows (newer Flink releases deprecate RocksDBStateBackend in favor of EmbeddedRocksDBStateBackend):

env.setStateBackend(new RocksDBStateBackend("hdfs:///checkpoints", true)); // true enables incremental checkpoints

This reduces the amount of data written per checkpoint. For end-to-end exactly-once results, combine replayable sources with sinks that use a two-phase commit protocol. Each checkpoint's output is then committed atomically: either all of it becomes visible, or none of it does.
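The two-phase commit pattern on the sink side can be sketched as follows. TwoPhaseCommitSink is a hypothetical in-memory class, not a real framework API. Records accumulate in an open transaction, pre_commit seals it when a checkpoint is taken, and commit makes it visible only once the checkpoint has completed. On recovery, transactions that belong to a completed checkpoint are still committed. Newer ones are discarded because the replayable source will resend those records.

```python
class TwoPhaseCommitSink:
    """Hypothetical in-memory sink following the two-phase commit pattern."""

    def __init__(self):
        self.committed = []  # output visible to downstream consumers
        self.pending = {}    # checkpoint_id -> pre-committed (sealed, not yet visible) records
        self.current = []    # open transaction: records written since the last checkpoint

    def write(self, record) -> None:
        self.current.append(record)

    def pre_commit(self, checkpoint_id: int) -> None:
        # Phase 1, at checkpoint time: seal the open transaction and start a new one.
        self.pending[checkpoint_id] = self.current
        self.current = []

    def commit(self, checkpoint_id: int) -> None:
        # Phase 2, once the checkpoint is complete: make the sealed records visible.
        self.committed.extend(self.pending.pop(checkpoint_id, []))

    def recover(self, last_completed_id: int) -> None:
        # After a failure: transactions of completed checkpoints must still be
        # committed; newer ones are dropped because the source will replay them.
        for cid in sorted(self.pending):
            if cid <= last_completed_id:
                self.committed.extend(self.pending[cid])
        self.pending.clear()
        self.current = []
```

Consider a sink that writes a and b, calls pre_commit(1), and then writes c. Nothing is visible until commit(1) publishes a and b. If a failure follows pre_commit(2) and a further write of d, recover(1) discards c and d, and the visible output stays exactly a and b.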

Finally, make use of your framework’s savepoint mechanism for regular state backups. These serve as reliable recovery points. Pair this with continuous monitoring using built-in metrics and alerts to catch any anomalies early and keep your system running smoothly.

Once you've mastered the basics of checkpointing, these advanced techniques can take your system's performance and reliability to the next level. They focus on minimizing overhead while ensuring data integrity remains intact.

Compressing incremental checkpoints is a smart way to cut down on storage costs and speed up recovery times. Instead of saving everything, you only store the changes since the last checkpoint - and then compress that data even further.

Studies show that Data Structure-based Incremental Checkpointing (DSIC) can reduce logging time by over 70% and save 40% on storage costs compared to older methods. That’s a game-changer for systems managing large volumes of state data.
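Here is a minimal sketch of compressed incremental checkpoints. It assumes state is a flat dictionary with string keys and JSON-serializable values. Each checkpoint stores only the keys that were added, changed, or deleted since the previous one. The deltas are compressed with zlib from Python's standard library, which stands in here for Zstd or LZ4 so the sketch has no third-party dependencies. Restoring means applying the deltas in order on top of a full base snapshot. The function names are hypothetical.

```python
import json
import zlib


def take_incremental(state: dict, previous: dict) -> bytes:
    """Encode only keys added, changed, or deleted since `previous`, then compress."""
    changed = {k: v for k, v in state.items() if k not in previous or previous[k] != v}
    deleted = sorted(k for k in previous if k not in state)
    payload = json.dumps({"changed": changed, "deleted": deleted}, sort_keys=True)
    return zlib.compress(payload.encode("utf-8"), 6)


def restore(base: dict, deltas: list) -> dict:
    """Rebuild state by applying compressed deltas, oldest first, on top of a full base snapshot."""
    state = dict(base)
    for blob in deltas:
        delta = json.loads(zlib.decompress(blob).decode("utf-8"))
        state.update(delta["changed"])
        for k in delta["deleted"]:
            state.pop(k, None)
    return state
```

The restore path also shows the cost side. The longer the chain of deltas, the more pieces must be read back, which is why many incremental schemes periodically consolidate deltas or take a fresh full snapshot.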

When choosing a compression method, consider your system's needs:

"Our findings indicate that Zstd is the most competent compression method under all scenarios and through comparing with an uncompressed approach we point out that compressing the communication data disseminated from the primary replica coupled with the periodic incremental checkpointing algorithm not only decreases the average blocking time up to 5% but it also improves the overall system throughput by 4% compared to the no compression case." - Berkin Guler and Oznur Ozkasap

For real-time applications where speed is paramount, LZ4 is your go-to. On the other hand, batch processing systems can benefit greatly from Zstd's storage savings over time. Pairing compression with asynchronous snapshots can further boost throughput by separating checkpoint creation from main processing tasks.

Asynchronous checkpointing lets your system keep processing new data while checkpoints are created in the background. This reduces delays and improves efficiency.

For example, Databricks reported up to a 25% improvement in average micro-batch duration for streams with large state sizes containing millions of entries when asynchronous checkpointing was enabled. This is especially beneficial for high-volume, stateful applications.

Here’s how it works: while executors handle the checkpoint process in the background, the system continues processing data. Once the checkpoint is complete, the executors report back to the driver.
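The core idea can be sketched with a thread: a short synchronous step freezes a copy of the state, and a background write follows. This is a simplified illustration, not Databricks' or Flink's implementation. AsyncCheckpointer is hypothetical, and real state backends avoid a full deep copy by using cheaper snapshot mechanisms. Joining the returned thread plays the role of the executors reporting completion back to the driver.

```python
import copy
import threading


class AsyncCheckpointer:
    """Hypothetical helper: copy state synchronously, persist it on a background thread."""

    def __init__(self, persist):
        self.persist = persist  # callable(checkpoint_id, snapshot) that does the slow write
        self.threads = []

    def trigger(self, checkpoint_id: int, state: dict) -> threading.Thread:
        snapshot = copy.deepcopy(state)  # synchronous part: freeze a consistent copy
        t = threading.Thread(target=self.persist, args=(checkpoint_id, snapshot))
        t.start()                        # asynchronous part: write in the background
        self.threads.append(t)
        return t
```

Because the snapshot is copied before the thread starts, processing can keep mutating the live state while the slow write runs. The persisted checkpoint still reflects the state at trigger time.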

If you're using Databricks Runtime 10.4 or newer, enabling this feature is simple:

spark.conf.set("spark.databricks.streaming.statefulOperator.asyncCheckpoint.enabled","true")

spark.conf.set("spark.sql.streaming.stateStore.providerClass", "com.databricks.sql.streaming.state.RocksDBStateStoreProvider")

Keep in mind that asynchronous checkpointing is compatible only with RocksDB-based state stores. While recovery may take slightly longer - requiring the reprocessing of two micro-batches instead of one - the performance gains during normal operations often make this trade-off worthwhile.

Every streaming framework comes with its own set of optimization options. Fine-tuning these settings can mean the difference between a sluggish system and a high-performing one.

To keep everything running smoothly, monitor checkpoint performance using your framework's built-in metrics. Set up alerts for failures or unusually long durations - these can serve as early indicators of potential issues. The goal is to strike a balance between checkpoint frequency, storage efficiency, and recovery speed tailored to your specific needs.

Checkpointing plays a crucial role in preventing data loss and ensuring systems can bounce back during failures. The strategies we've discussed here provide the groundwork for building a dependable real-time data processing system.

Start with a 10-second checkpoint interval and fine-tune it based on your system's needs. Balancing checkpoint frequency is key: shorter intervals reduce data loss but increase overhead, while longer intervals may boost performance but lengthen recovery times.

Your configuration choices matter. Carefully select storage options, state management settings, and timeouts; a 1-minute checkpoint timeout is a reasonable starting point. Aligned checkpoints are the safe default. Unaligned checkpoints still provide exactly-once guarantees while shortening checkpoint times under backpressure, at the cost of larger checkpoints. If at-least-once results are acceptable, at-least-once mode skips barrier alignment entirely for lower latency.

Keep an eye on metrics like checkpoint duration, size, and alignment time to identify and address potential issues early. In Flink, the tolerable checkpoint failure number defaults to 0, meaning your job fails on the first checkpoint failure. Adjust this setting to match your reliability needs. Monitoring these metrics strengthens the reliability principles we've covered.
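The failure-tolerance rule is easy to model. In Flink it is configured with CheckpointConfig.setTolerableCheckpointFailureNumber and counts consecutive failures. The FailureTolerance class below is a hypothetical stand-in that applies the same rule, where a value of 0 fails the job on the first failed checkpoint.

```python
class FailureTolerance:
    """Hypothetical model: fail the job after more than `tolerable` consecutive checkpoint failures."""

    def __init__(self, tolerable: int = 0):
        self.tolerable = tolerable
        self.consecutive_failures = 0

    def on_checkpoint(self, succeeded: bool) -> bool:
        """Record one checkpoint outcome; return True if the job should be failed."""
        if succeeded:
            self.consecutive_failures = 0  # a success resets the streak
            return False
        self.consecutive_failures += 1
        return self.consecutive_failures > self.tolerable
```

With tolerable=2, the sequence fail, fail, succeed, fail, fail, fail triggers a job failure only on the last outcome. The success in the middle resets the counter.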

Optimization is equally important. Techniques like incremental and asynchronous checkpointing help reduce both overhead and latency, ensuring efficiency under load.

Don’t overlook security and backups. Encrypt sensitive checkpoint data and establish robust backup procedures, including redundant copies stored in secure locations. These measures protect your data and ensure fault tolerance even in the face of infrastructure issues.

Ultimately, effective checkpointing requires aligning your strategy with the specific needs of your application. Regularly monitor performance, fine-tune configurations, and apply optimization techniques to minimize downtime and maintain stability.

"The simplest way to perform streaming analytics is not having to reason about streaming at all." - Tathagata Das [3]

When deciding on the best checkpoint interval, it’s all about balancing your system’s performance needs with its fault tolerance requirements. A common starting point is an interval of 10 seconds. This timeframe strikes a practical balance - offering decent fault tolerance without causing significant overhead.

If you shorten the interval, you might see faster recovery times, but it could come at the cost of higher resource consumption. On the flip side, extending the interval might lower resource usage but increases the risk of losing more data in the event of a failure.

The key is to tailor the interval to your application’s importance and workload. Make sure your checkpointing setup aligns with the performance targets and recovery needs of your system to keep things running smoothly.

Incremental checkpointing with RocksDB brings a host of benefits when managing large application states. By capturing only the changes made since the last checkpoint, it cuts down checkpointing time while also reducing storage and network demands. This efficient method ensures the system keeps running smoothly, even during heavy workloads.

Recovery can also be faster, particularly when CPU or disk I/O is the bottleneck. When network bandwidth is the bottleneck, restoring may take longer because more delta files must be fetched. Plus, incremental checkpointing allows for more frequent checkpoints, boosting fault tolerance and decreasing the amount of data that needs reprocessing after an issue. It's a smart approach for enhancing both reliability and efficiency in stream processing systems.

To maintain dependable and consistent checkpoints in distributed stream processing, exactly-once processing semantics play a crucial role. This means regularly saving the application's state so it can bounce back from failures without losing or duplicating data. Apache Flink is a good example. A checkpoint completes only after every task has acknowledged its snapshot, and transactional sinks use a two-phase commit protocol so their output is committed only once the checkpoint is complete.
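The completion rule can be sketched as a small coordinator that treats a checkpoint as complete only after every task has acknowledged its snapshot. That completion is the signal on which transactional sinks commit. CheckpointCoordinator and its methods are hypothetical names for this simplified, single-process illustration, not Flink's API.

```python
class CheckpointCoordinator:
    """Hypothetical coordinator: a checkpoint completes only when every task has acknowledged it."""

    def __init__(self, tasks):
        self.tasks = set(tasks)
        self.pending = {}    # checkpoint_id -> tasks that have not acknowledged yet
        self.completed = []  # ids of completed checkpoints, in completion order

    def trigger(self, checkpoint_id: int) -> None:
        self.pending[checkpoint_id] = set(self.tasks)

    def acknowledge(self, checkpoint_id: int, task) -> bool:
        """Record one task's ack; return True if this ack completes the checkpoint."""
        waiting = self.pending.get(checkpoint_id)
        if waiting is None:
            return False  # unknown, already completed, or aborted checkpoint
        waiting.discard(task)
        if waiting:
            return False
        del self.pending[checkpoint_id]
        self.completed.append(checkpoint_id)  # now sinks may commit their transactions
        return True

    def abort(self, checkpoint_id: int) -> None:
        # e.g. a task failed or the checkpoint timed out
        self.pending.pop(checkpoint_id, None)
```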

When setting up checkpoints, it’s important to weigh the frequency against the impact on performance. Taking checkpoints more often can minimize potential data loss, but it may also add extra overhead. Striking the right balance is key and should reflect your application's specific reliability requirements. Keep an eye on latency and tweak checkpoint intervals as needed to achieve smooth performance while maintaining fault tolerance.
