
Jul 30, 2025


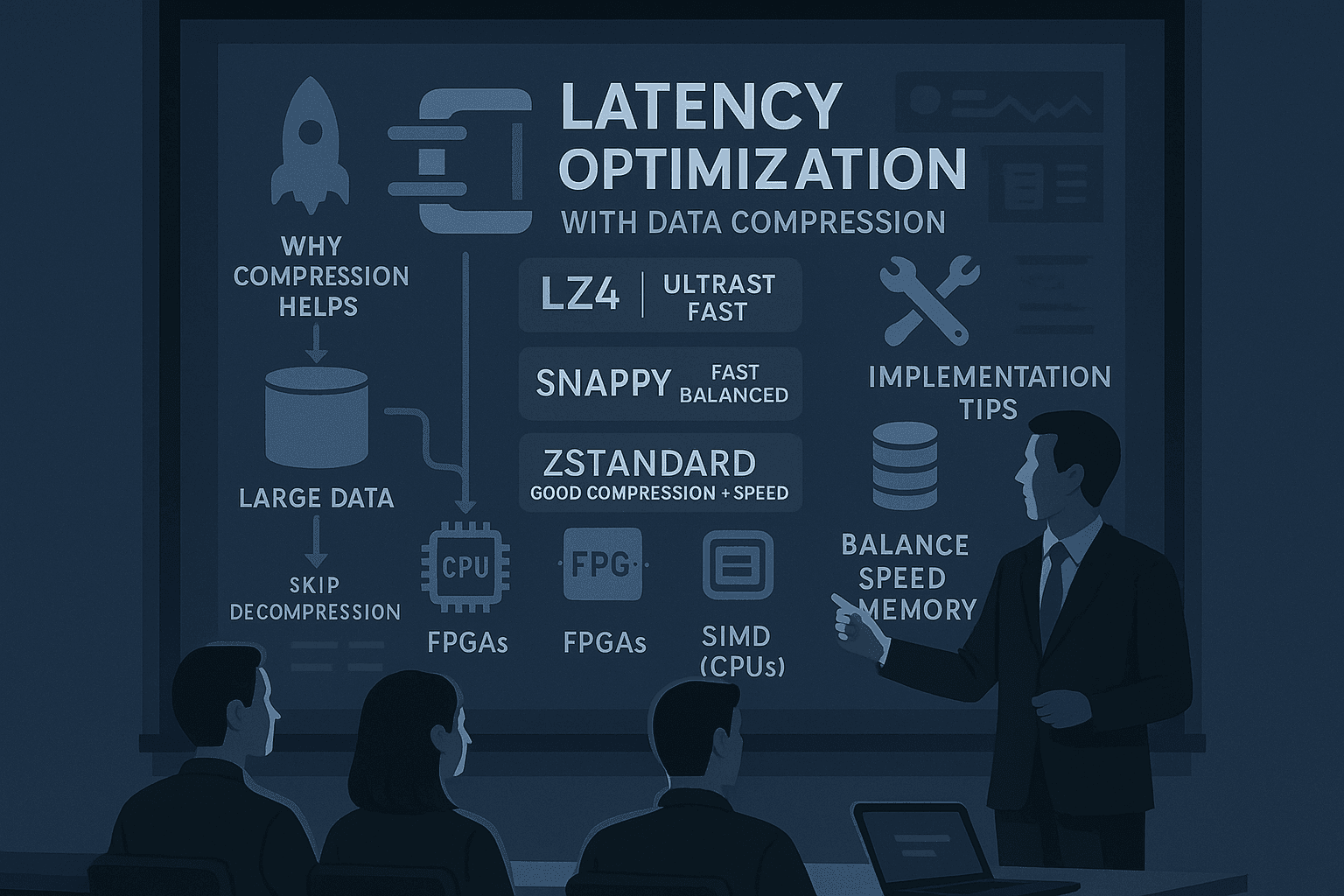

Want faster real-time streaming? Data compression is the key. It reduces the size of data sent over networks, cutting delays in applications like video streaming, online gaming, and financial trading.

Here’s what you need to know:

Quick Tip: Always compress data near the source to save bandwidth and reduce congestion.

Want more details? Keep reading to learn implementation strategies, examples, and tips for choosing the right compression method.

Improving latency in real-time streaming depends heavily on the efficiency of algorithms and the capabilities of the hardware in use. Real-time streaming prioritizes speed over achieving the highest compression ratios. While traditional methods like gzip or bzip2 are great for storage purposes, they tend to fall short in real-time scenarios where every millisecond matters.
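A quick micro-benchmark makes the speed-versus-ratio trade-off concrete. The sketch below sticks to Python's standard library, so `zlib` at level 1 stands in for a speed-oriented setting, while `bz2` and `lzma` represent storage-oriented codecs. LZ4, Snappy, and Zstandard need third-party packages and are not shown. The synthetic payload is an assumption, so swap in real messages from your stream, because timings and ratios depend heavily on the data.

```python
import bz2
import lzma
import time
import zlib


def benchmark(name, compress, payload, rounds=5):
    """Return (name, compression ratio, average milliseconds per compress call)."""
    start = time.perf_counter()
    for _ in range(rounds):
        compressed = compress(payload)
    elapsed_ms = (time.perf_counter() - start) * 1000 / rounds
    return name, len(payload) / len(compressed), elapsed_ms


# Hypothetical sample: repetitive JSON-like telemetry records (roughly 200 KB)
payload = b"".join(
    b'{"sensor": %d, "status": "ok", "value": %d}\n' % (i % 50, i % 997)
    for i in range(5_000)
)

codecs = [
    ("zlib level 1", lambda d: zlib.compress(d, 1)),
    ("zlib level 9", lambda d: zlib.compress(d, 9)),
    ("bz2 level 9", lambda d: bz2.compress(d, 9)),
    ("lzma preset 6", lambda d: lzma.compress(d, preset=6)),
]

results = [benchmark(name, fn, payload) for name, fn in codecs]
for name, ratio, ms in results:
    print(f"{name:14s} ratio {ratio:5.1f}x  {ms:8.2f} ms per call")
```

Expect zlib at level 1 to be considerably faster than `bz2` or `lzma` while giving up some ratio; that gap is why speed-oriented codecs are the usual choice on latency-sensitive paths.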

Certain algorithms are specifically designed to deliver high-speed compression, making them ideal for real-time streaming:

For even better results, modern hardware can enhance the performance of these algorithms, pushing the boundaries of what’s possible in real-time compression.

Hardware acceleration takes compression to the next level, surpassing the limits of software-based methods:

Beyond speeding up compression, some methods go a step further by allowing systems to work directly with compressed data, bypassing decompression entirely.

One innovative way to cut latency is by processing data in its compressed state, eliminating the need for decompression and saving both time and computational resources.

Practical tools and libraries make it easier to work with compressed data. For instance, Python's gzip, zipfile, and tarfile modules let you read compressed files directly, and Pandas can load .csv.gz files straight into a DataFrame. Command-line utilities like zcat and unzip -p also give direct access to compressed content. Strictly speaking, these tools still decompress the data, but they do it on the fly in memory rather than writing temporary decompressed files to disk.
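As a minimal sketch of reading compressed data without writing a decompressed copy, the generator below streams rows out of a `.csv.gz` file using the standard `gzip` and `csv` modules. The file name and columns are hypothetical; the data is still decompressed, but incrementally and in memory.

```python
import csv
import gzip
import os
import tempfile


def iter_csv_gz(path):
    """Yield rows from a .csv.gz file, decompressing incrementally in memory."""
    # Text mode decompresses on the fly; no temporary decompressed file is written
    with gzip.open(path, mode="rt", newline="", encoding="utf-8") as f:
        yield from csv.DictReader(f)


# Create a small example file with hypothetical data
path = os.path.join(tempfile.mkdtemp(), "events.csv.gz")
with gzip.open(path, mode="wt", newline="", encoding="utf-8") as f:
    writer = csv.writer(f)
    writer.writerow(["id", "latency_ms"])
    for i in range(1000):
        writer.writerow([i, i % 40])

# Filter while streaming: only rows above 35 ms are kept in memory
slow = [row for row in iter_csv_gz(path) if int(row["latency_ms"]) > 35]
print(len(slow))
```

In Pandas, `pandas.read_csv(path)` achieves the same in one call, because it infers gzip compression from the `.gz` extension.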

The success of these approaches often depends on choosing compression algorithms that support random access to compressed data. While this may slightly reduce compression efficiency, the trade-off is often worth it in real-time streaming scenarios where latency is a top priority.
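One common way to get random access is to compress data in fixed-size, independent blocks and keep an index of where each block starts. The sketch below does this with `zlib`; `BLOCK_SIZE` and the index layout are assumptions for illustration, not a standard format. Reading a range decompresses only the blocks that overlap it. Because each block is compressed on its own, with no back-references into earlier blocks, the overall ratio is somewhat lower than compressing the whole buffer at once, which is the trade-off described above.

```python
import zlib

BLOCK_SIZE = 64 * 1024  # uncompressed bytes per block (assumed, tunable)


def compress_blocks(data: bytes, block_size: int = BLOCK_SIZE):
    """Compress data as independent zlib blocks.

    Returns (blob, index) where index[i] = (offset, length) of block i in blob.
    """
    blob = bytearray()
    index = []
    for start in range(0, len(data), block_size):
        block = zlib.compress(data[start:start + block_size], 1)
        index.append((len(blob), len(block)))
        blob += block
    return bytes(blob), index


def read_range(blob: bytes, index, start: int, end: int, block_size: int = BLOCK_SIZE) -> bytes:
    """Return data[start:end] by decompressing only the blocks that overlap it."""
    first, last = start // block_size, (end - 1) // block_size
    out = bytearray()
    for i in range(first, last + 1):
        offset, length = index[i]
        out += zlib.decompress(blob[offset:offset + length])
    skip = start - first * block_size
    return bytes(out[skip:skip + (end - start)])


data = bytes(range(256)) * 2000  # 512,000 bytes of sample data
blob, index = compress_blocks(data)
print(len(index), read_range(blob, index, 100_000, 100_008))
```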

This section dives into the practical steps for implementing low-latency compression. The key lies in strategically placing compression steps, fine-tuning code, and balancing speed with resource consumption.

Where you place compression and decompression in your streaming architecture has a large impact on performance. The most critical stage for these decisions is data ingestion, where efficient serialization, smart compression, and early filtering matter most.

Compression should occur as close to the data source as possible. This minimizes uncompressed data traveling through the network, reducing congestion. However, avoid compressing data that requires immediate processing to prevent unnecessary delays.

For high-volume streams, especially those with repetitive data patterns, compression is highly effective. For example, a typical streaming pipeline - covering ingestion, transformation, and syncing to a datastore - can process data in under 15 seconds. Adding compression often reduces this time further when network transmission is a major factor.

Pairing compression with early filtering can cut down data volume and latency even more. Smaller datasets are easier to compress and require less computational power.
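The sketch below filters a hypothetical record stream before compressing it: it keeps only `error` events and only the fields downstream consumers need, then compresses the result with `zlib`. The record shape, the field list, and the `keep` predicate are all assumptions for illustration.

```python
import json
import zlib


def filter_then_compress(records, keep, fields=("id", "value"), level=1):
    """Drop unneeded records and fields first, then compress what remains."""
    kept = [{f: r[f] for f in fields} for r in records if keep(r)]
    payload = "\n".join(json.dumps(r, separators=(",", ":")) for r in kept).encode()
    return zlib.compress(payload, level), len(kept)


# Hypothetical stream: only "error" events need to leave the device
records = [
    {"id": i, "level": "error" if i % 10 == 0 else "debug", "value": i, "note": "x" * 40}
    for i in range(10_000)
]
filtered, kept_count = filter_then_compress(records, keep=lambda r: r["level"] == "error")
unfiltered = zlib.compress("\n".join(json.dumps(r) for r in records).encode(), 1)
print(f"{kept_count} records, {len(filtered)} bytes vs {len(unfiltered)} bytes unfiltered")
```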

That said, compression isn't always a win. For low-volume streams, the time spent compressing data can actually increase latency. In such cases, lightweight algorithms like LZ4 or Snappy are better choices: they trade some compression ratio for very fast compression and decompression, so you still cut network costs without adding much processing time.
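A simple guard against compressing tiny messages is a size threshold plus a one-byte header that tells the receiver whether the body is compressed. In this sketch `zlib` at level 1 stands in for LZ4 or Snappy so the example needs only the standard library, and `MIN_COMPRESS_BYTES` is a hypothetical value you would tune from your own measurements. It also falls back to the raw bytes whenever compression would not shrink the payload.

```python
import zlib

MIN_COMPRESS_BYTES = 1024  # hypothetical threshold; tune from measurements
RAW, DEFLATED = b"\x00", b"\x01"  # one-byte header describing the body


def encode(payload: bytes) -> bytes:
    """Compress only when the payload is large enough and actually shrinks."""
    if len(payload) >= MIN_COMPRESS_BYTES:
        compressed = zlib.compress(payload, 1)
        if len(compressed) < len(payload):
            return DEFLATED + compressed
    return RAW + payload


def decode(message: bytes) -> bytes:
    """Read the header byte and decompress only if the sender compressed."""
    header, body = message[:1], message[1:]
    return zlib.decompress(body) if header == DEFLATED else body
```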

For backend systems in Node.js and Python, using fast compression libraries is key. Below are examples of how to integrate compression into your streaming pipelines.

Node.js Example with LZ4:

```javascript
const lz4 = require('lz4'); // the "lz4" package on npm (node-lz4)
const { Transform, pipeline } = require('stream');

// Compresses each incoming chunk into its own LZ4 frame.
// node-lz4 has no numeric compression level; lz4.encode() uses the fast
// (non-high-compression) mode unless { highCompression: true } is passed.
class LZ4CompressTransform extends Transform {
  _transform(chunk, encoding, callback) {
    try {
      callback(null, lz4.encode(chunk));
    } catch (error) {
      callback(error);
    }
  }
}

// Integrate the compression stream into your pipeline.
// dataStream and networkTransport are placeholders for your own
// readable source and writable destination.
pipeline(
  dataStream,
  new LZ4CompressTransform(),
  networkTransport,
  (error) => {
    if (error) console.error('Compression pipeline failed:', error);
  }
);
```

Python Example with Snappy:

```python
from typing import AsyncIterator

import snappy  # provided by the python-snappy package


class StreamCompressor:
    """Compress and decompress an async stream of byte chunks with Snappy."""

    async def compress_stream(self, data_stream: AsyncIterator[bytes]) -> AsyncIterator[bytes]:
        async for chunk in data_stream:
            # Each chunk is compressed on its own, so the receiver can decode it independently
            yield snappy.compress(chunk)

    async def decompress_stream(self, compressed_stream: AsyncIterator[bytes]) -> AsyncIterator[bytes]:
        async for compressed_chunk in compressed_stream:
            yield snappy.decompress(compressed_chunk)


# Usage example: incoming_data (an async iterator of bytes) and
# send_to_network (a coroutine) stand in for your own I/O code.
async def main() -> None:
    compressor = StreamCompressor()
    async for compressed_data in compressor.compress_stream(incoming_data):
        await send_to_network(compressed_data)

# Start it with asyncio.run(main()) once those pieces exist.
```

Real-time Compression with Error Checking:

To ensure data integrity, especially in real-time streaming, error-checking is essential. Here’s an example using Python:

```python
import hashlib

import zstandard as zstd


class RealtimeCompressor:
    def __init__(self, compression_level: int = 1):
        # Low levels favor speed, which suits real-time paths
        self.compressor = zstd.ZstdCompressor(level=compression_level)
        self.decompressor = zstd.ZstdDecompressor()

    def compress_with_integrity(self, data: bytes) -> tuple[bytes, str]:
        # Checksum the original bytes so the receiver can verify the round trip.
        # MD5 catches accidental corruption but offers no protection against tampering.
        checksum = hashlib.md5(data).hexdigest()
        compressed = self.compressor.compress(data)
        return compressed, checksum

    def decompress_with_verification(self, compressed_data: bytes, expected_checksum: str) -> bytes:
        # One-shot decompress relies on the content size ZstdCompressor writes by default
        decompressed = self.decompressor.decompress(compressed_data)
        actual_checksum = hashlib.md5(decompressed).hexdigest()
        if actual_checksum != expected_checksum:
            raise ValueError("Data integrity check failed")
        return decompressed
```

Error-checking routines like this detect data that was corrupted in transit, so it can be rejected or re-requested instead of being processed silently.
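The `RealtimeCompressor` above returns the checksum separately, which leaves open how it travels with the data. One option, sketched here with the standard library instead of `zstandard`, is a length-prefixed frame that carries a CRC32 of the uncompressed bytes; the length prefix also lets a receiver find message boundaries on a byte stream such as TCP. The frame layout is an assumption, not a standard. CRC32 is cheaper than MD5 and, like MD5 in this role, detects accidental corruption but not deliberate tampering. zlib's own format already includes an Adler-32 checksum, so the CRC here mainly serves as an end-to-end check on the decompressed result.

```python
import struct
import zlib

# Network byte order: compressed length, CRC32 of the original (uncompressed) data
HEADER = struct.Struct("!II")


def pack_frame(data: bytes, level: int = 1) -> bytes:
    """Compress data and prepend its compressed length and a CRC32 of the original."""
    compressed = zlib.compress(data, level)
    return HEADER.pack(len(compressed), zlib.crc32(data)) + compressed


def unpack_frame(frame: bytes) -> bytes:
    """Decompress a frame and verify the CRC32 of the result."""
    length, expected_crc = HEADER.unpack_from(frame)
    compressed = frame[HEADER.size:HEADER.size + length]
    if len(compressed) != length:
        raise ValueError("Truncated frame")
    data = zlib.decompress(compressed)
    if zlib.crc32(data) != expected_crc:
        raise ValueError("Data integrity check failed")
    return data


frame = pack_frame(b"hello" * 100)
print(len(frame), unpack_frame(frame)[:10])
```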

Achieving the right balance between memory usage, compression speed, and compression ratio depends on your specific needs and real-world performance metrics. Faster algorithms like RLE are often better suited for real-time applications, even if they don’t compress as much.

Match the algorithm to your data patterns. For instance:

Processing data in smaller chunks helps manage memory usage. Adjust buffer sizes based on your system’s resources and the size of the data being processed. For large streams, techniques that maintain a fixed memory footprint are particularly useful.
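For large streams, an incremental compressor keeps memory use on the compression side bounded: it reads and compresses one chunk at a time instead of loading the whole input. The sketch uses `zlib.compressobj`; the 64 KB `chunk_size` is an assumed starting point to adjust for your system.

```python
import io
import zlib


def compress_stream(source, sink, chunk_size=64 * 1024, level=1):
    """Compress a binary file-like source into sink, one chunk at a time."""
    compressor = zlib.compressobj(level)
    while chunk := source.read(chunk_size):
        sink.write(compressor.compress(chunk))
    sink.write(compressor.flush())  # emit remaining data and end the stream


def decompress_stream(source, sink, chunk_size=64 * 1024):
    """Inverse of compress_stream. Output per call is not capped here;
    decompressobj().decompress() accepts max_length if you need a hard bound."""
    decompressor = zlib.decompressobj()
    while chunk := source.read(chunk_size):
        sink.write(decompressor.decompress(chunk))
    sink.write(decompressor.flush())


# Usage with in-memory buffers (real code would use files or sockets)
original = b"sensor=7 status=ok\n" * 100_000
packed, restored = io.BytesIO(), io.BytesIO()
compress_stream(io.BytesIO(original), packed)
packed.seek(0)
decompress_stream(packed, restored)
print(len(original), len(packed.getvalue()), restored.getvalue() == original)
```

For live streams where the receiver must decode each message as soon as it arrives, calling `compressor.flush(zlib.Z_SYNC_FLUSH)` after a message emits all pending output without ending the stream, at a small cost in ratio.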

Compression levels should be dynamic. During peak traffic, prioritize speed with lower compression settings. During quieter periods, increase compression ratios to save on storage and bandwidth.
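Dynamic levels can be as simple as mapping a load signal to a compression level. In the sketch below, `queue_depth` (messages waiting to be sent) and `busy_threshold` are hypothetical signals and values; any backlog or CPU metric you already collect could play the same role. Changing the level per message is safe with `zlib`, because the decompressor does not need to know which level was used.

```python
import zlib


def choose_level(queue_depth: int, busy_threshold: int = 100) -> int:
    """Map a backlog size to a zlib level: fast under load, denser when quiet."""
    if queue_depth >= busy_threshold:
        return 1   # peak traffic: favor speed
    if queue_depth >= busy_threshold // 4:
        return 6   # moderate load: zlib's default trade-off
    return 9       # quiet period: favor compression ratio


def compress_adaptive(payload: bytes, queue_depth: int) -> bytes:
    return zlib.compress(payload, choose_level(queue_depth))
```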

Monitoring is non-negotiable. Track key metrics like compression ratios, processing times, memory usage, and latency. Set up alerts for when compression overhead exceeds acceptable limits, signaling the need to tweak algorithms or settings.
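A lightweight way to start monitoring is to wrap the compression call and record bytes in, bytes out, and time spent. The `CompressionStats` class below is a hypothetical helper; the 5 ms `max_overhead_ms` limit is an assumed value, and in production you would export these numbers to your metrics system instead of keeping them in lists.

```python
import time
import zlib
from dataclasses import dataclass, field


@dataclass
class CompressionStats:
    max_overhead_ms: float = 5.0  # hypothetical per-call alert threshold
    bytes_in: int = 0
    bytes_out: int = 0
    timings_ms: list = field(default_factory=list)
    alerts: list = field(default_factory=list)

    def compress(self, payload: bytes, level: int = 1) -> bytes:
        """Compress payload while recording size and timing metrics."""
        start = time.perf_counter()
        compressed = zlib.compress(payload, level)
        elapsed_ms = (time.perf_counter() - start) * 1000
        self.bytes_in += len(payload)
        self.bytes_out += len(compressed)
        self.timings_ms.append(elapsed_ms)
        if elapsed_ms > self.max_overhead_ms:
            self.alerts.append(
                f"compression took {elapsed_ms:.2f} ms (limit {self.max_overhead_ms} ms)"
            )
        return compressed

    @property
    def ratio(self) -> float:
        """Overall compression ratio so far (uncompressed / compressed)."""
        return self.bytes_in / self.bytes_out if self.bytes_out else 0.0
```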

Lastly, schedule compression during low-power states to save energy. This is especially important for mobile and edge computing setups where power efficiency directly impacts performance and costs.

After applying compression strategies, it's crucial to measure latency accurately to confirm any performance gains. Monitoring key metrics will help verify the effectiveness of your compression methods.

When evaluating latency, focus on metrics like response time, throughput, and network latency. Response time measures how long it takes for a system to respond to a request, while throughput tracks the number of requests handled in a specific time frame, often expressed in bytes or transactions per second.

For real-time streaming systems, average latency is particularly critical, as it shows how quickly your system typically reacts to requests, usually measured in milliseconds. Compression mainly reduces the transmission part of network latency (the time needed to put bytes on the wire); it does not shorten propagation delay, which depends on distance.
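Averages can hide occasional slow requests, so it helps to report tail percentiles such as p95 and p99 alongside the mean. A minimal way to do that from raw samples uses Python's `statistics` module (Python 3.8 or later); the sample values below are made up.

```python
import statistics


def latency_summary(samples_ms):
    """Summarize latency samples in milliseconds: mean plus tail percentiles.

    Needs at least two samples (a statistics.quantiles requirement before Python 3.13).
    """
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")  # 99 cut points
    return {
        "mean": statistics.fmean(samples_ms),
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
        "max": max(samples_ms),
    }


# Hypothetical samples: mostly fast, with a few slow outliers
samples = [12.0] * 95 + [80.0] * 5
print(latency_summary(samples))
```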

On the client side, metrics such as Time to First Byte (TTFB), page load time, and rendering time provide insights into the user experience. One key metric, Time to Interactive (TTI), indicates when users can start engaging with your application.

Server-side metrics are equally important. For instance, CPU utilization reveals how much processing power is consumed during compression and decompression, while memory utilization tracks the RAM usage of your compression algorithms. Additionally, disk I/O and disk capacity metrics can highlight storage-related impacts of your compression strategy.

Another critical metric is the error rate, which measures the percentage of failed requests or those that didn't receive a response. Aggressive compression settings or unstable networks can sometimes lead to errors.

With these metrics defined, you have a foundation for choosing and configuring specialized monitoring and testing tools.

To track compression performance in real-time, monitoring tools are indispensable. These tools can continuously measure metrics like latency, packet loss, jitter, and throughput. Setting performance baselines and alerts ensures you can quickly identify and address any issues.

For ultra-low latency applications, such as video streaming, advanced monitoring techniques are essential. These include ping tests for connectivity, Round-Trip Time (RTT) for network performance, and end-to-end latency measurements for a comprehensive system evaluation.

Tools designed for frame analysis can help assess how compression affects individual data packets. Meanwhile, dedicated latency monitoring solutions provide ongoing oversight of streaming performance, often with built-in caching features to complement your compression approach.

Continuous network traffic monitoring is also vital. It identifies congestion sources and evaluates whether latency improvements stem from compression or reduced network traffic. Implementing Quality of Service (QoS) policies can prioritize latency-sensitive applications, ensuring compressed streams receive the necessary bandwidth.

These tools can also simulate real-world conditions, enabling more accurate performance evaluations.

Controlled testing is key to understanding how compression affects latency. Use real-world input data from your application instead of synthetic data to ensure meaningful results.

A/B testing is a reliable method for comparison. By running the same workloads through both compressed and uncompressed pipelines, you can measure identical metrics and isolate the impact of compression.

Before implementing compression, establish a latency baseline by documenting your system's current performance under various load conditions, including peak traffic and different data types.

| Concurrency Setting | Throughput Impact | Latency Impact |
|---|---|---|
| Low concurrency | Lower overall throughput | Faster single-request responses |
| Moderate concurrency | Balanced throughput and latency | Acceptable response times for most users |
| High concurrency | Maximizes total requests served | Slight increase in response time per request |

Testing across different concurrency levels will help you understand compression performance. Additionally, calculating the compression ratio (CR) - the size of uncompressed files compared to compressed ones - can reveal the balance between compression efficiency and processing overhead.
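Compression ratio and space savings are two views of the same number: savings = 1 - 1/CR, so a CR of 10 means a 90% reduction in size. The sketch computes both with `zlib` on a hypothetical sample; measure on your real payloads, since ratios vary widely by data type.

```python
import zlib


def compression_ratio(original: bytes, compressed: bytes) -> float:
    """CR = uncompressed size / compressed size (higher is better)."""
    return len(original) / len(compressed)


def space_savings(cr: float) -> float:
    """Fraction of bytes saved: 1 - 1/CR. A CR of 10 means 90% savings."""
    return 1 - 1 / cr


sample = b"timestamp=1700000000,status=OK\n" * 1000  # hypothetical repetitive data
cr = compression_ratio(sample, zlib.compress(sample, 1))
print(f"CR {cr:.1f}x, savings {space_savings(cr):.1%}")
```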

For image or video data, visually inspect the quality of compressed files using software decoders or display tools. In applications like online gaming, latency often needs to stay below 50 milliseconds to meet performance benchmarks. These benchmarks can guide you in assessing whether your compression improvements align with your application's requirements.

As your system grows, regular load testing and performance tuning are essential. Compression strategies that work today may need adjustments as data volumes and processing demands increase. Continuous monitoring and fine-tuning will ensure you maintain optimal performance over time.

When deciding whether real-time data compression is the right fit for your system, it's crucial to weigh its benefits against its limitations. While it can significantly boost efficiency, it's not a one-size-fits-all solution.

Real-time data compression has clear advantages, but it also comes with challenges. Here's a breakdown of the key points:

| Benefits | Drawbacks |
|---|---|
| Reduced bandwidth usage: less data crosses the network, and highly compressible data can shrink by up to 90% | CPU overhead: compression and decompression require processing power |
| Faster transmission: in one example, compressing 1 GB into 2.3 MB cut transmission time from 30 seconds to 5 seconds | Implementation complexity: needs careful planning and expertise |
| Lower storage costs: smaller file sizes mean reduced infrastructure expenses | Potential data loss: lossy methods can degrade data quality |
| Improved user experience: faster loading times can boost engagement | Compatibility issues: not all systems support every compression format |
| Better network efficiency: reduces congestion and improves performance | Recovery challenges: corrupted compressed files are harder to restore |
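Whether compression actually shortens transmission depends on link speed as much as on ratio. A back-of-the-envelope model compares size / bandwidth without compression against compression time + (size / CR) / bandwidth + decompression time with it. The function below encodes that model; it ignores propagation delay and protocol overhead, and the payload size, link speeds, and codec timings in the example are hypothetical.

```python
def transfer_time_s(size_bytes, bandwidth_bps, ratio=1.0, compress_s=0.0, decompress_s=0.0):
    """Estimated seconds to ship one payload: codec time plus time on the wire.

    bandwidth_bps is in bits per second; ratio is uncompressed size / compressed size.
    Propagation delay and protocol overhead are ignored.
    """
    wire_s = (size_bytes / ratio) * 8 / bandwidth_bps
    return compress_s + wire_s + decompress_s


# Hypothetical: a 10 MB payload, CR of 4, 50 ms to compress, 20 ms to decompress
for label, bps in [("100 Mbit/s", 100e6), ("10 Gbit/s", 10e9)]:
    raw = transfer_time_s(10_000_000, bps)
    packed = transfer_time_s(10_000_000, bps, ratio=4.0, compress_s=0.05, decompress_s=0.02)
    print(f"{label}: raw {raw:.3f} s, compressed {packed:.3f} s")
```

With these assumed numbers, compression wins on the 100 Mbit/s link (about 0.27 s versus 0.8 s) but loses on the 10 Gbit/s link (about 0.072 s versus 0.008 s), because codec time dominates once the wire is fast.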

The main hurdle is often the computational load. As Barracuda Networks notes:

"The main disadvantage of data compression is the increased use of computing resources to apply compression to the relevant data. Because of this, compression vendors prioritize speed and resource efficiency optimizations in order to minimize the impact of intensive compression tasks."

Lossless compression is ideal for preserving data accuracy, while lossy compression achieves higher reductions by discarding less critical information. However, some algorithms come with licensing fees, and in some cases, further compression yields minimal gains despite increased processing demands.

Compression isn't always the answer. Recognizing when it won’t be effective can save time and resources.
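A cheap way to spot data that will not compress well (already-compressed media, archives, encrypted payloads) is to trial-compress a small prefix and skip full compression if the ratio is poor. The 4 KB `sample_size` and the 1.1 `min_ratio` cutoff are assumed values, and a prefix may not represent the whole payload, for example when a file starts with an uncompressed header.

```python
import os
import zlib


def looks_compressible(payload: bytes, sample_size: int = 4096, min_ratio: float = 1.1) -> bool:
    """Trial-compress a prefix and report whether its ratio clears min_ratio."""
    sample = payload[:sample_size]
    if not sample:
        return False
    return len(sample) / len(zlib.compress(sample, 1)) >= min_ratio


print(looks_compressible(b"status=ok;" * 1_000))  # repetitive text
print(looks_compressible(os.urandom(8_192)))      # random bytes stand in for encrypted data
```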

The right approach depends on your data, infrastructure, and goals. Here’s how to make an informed choice:

Ultimately, the decision comes down to balancing compression ratio, processing speed, and data integrity. By aligning your compression strategy with your system's specific needs, you can achieve optimal performance without compromising reliability.

Reducing latency through smart data compression is an ongoing process that requires careful planning and consistent refinement. The strategies outlined in this guide can lead to noticeable performance improvements when tailored to your specific needs.

Lowering latency starts with minimizing data size while maintaining quality. This principle shapes several essential practices:

The stakes are high: if a website takes more than 5.7 seconds to load, conversion rates can plummet to below 0.6%. Even a one-second delay in data processing can lead to a 2.11% drop in conversions on average. These numbers highlight the importance of implementing these practices effectively.

To put these strategies into action, start with a clear performance baseline. Monitor latency using metrics like absolute response times and percentiles. Gather data from both your application and infrastructure to pinpoint where delays originate.

Latency optimization is a complex process with many moving parts. Regular monitoring and iterative improvements are key to staying ahead.

Navigating the technical challenges of building low-latency systems can be daunting. That’s where Propelius Technologies steps in. With expertise in real-time application development using tools like React.js, Node.js, and advanced cloud platforms, we specialize in creating high-performance systems optimized for speed and efficiency.

Our team has delivered over 100 projects, mastering the art of streamlining data pipelines for minimal latency. Whether you need a complete solution or on-demand engineering support, we’re equipped to help you implement effective compression strategies and optimize your infrastructure.

Through our 90-day MVP sprint, we can quickly launch your optimized streaming system while sharing the implementation risks. Our developers are well-versed in the latest real-time processing technologies and can guide you through decisions on compression algorithms, infrastructure tweaks, and performance tracking.

When choosing a compression algorithm for real-time streaming, you need to consider a few important factors. First, determine the type of data being streamed - whether it's video, audio, or another format. Different algorithms are tailored to specific needs. For example, H.264 and AV1 are popular choices for video. While both provide efficient compression and maintain good quality, AV1 typically delivers better compression ratios, making it a strong option for modern streaming applications.

Another critical factor is deciding between lossy and lossless compression. Lossy compression reduces file size by removing some data, which helps with smoother playback and quicker load times. On the other hand, lossless compression keeps all the original data intact but results in larger file sizes. The choice depends on the balance you need between quality and performance.

Lastly, think about the computational resources at your disposal. More advanced algorithms often require greater processing power, which could impact real-time performance if your hardware isn't up to the task. Balancing these considerations is key to selecting the right compression method for your streaming needs.

Hardware-accelerated compression brings some hurdles, particularly in real-time streaming. One major concern is latency: while the accelerator speeds up the compression itself, the time spent transferring data to and from the hardware can cancel out those benefits. Another issue is the lack of adaptability in many accelerators, which makes it tough to switch between algorithms or handle diverse data types effectively.

To tackle these problems, developers can focus on fine-tuning data transfer protocols to cut down on latency. Pairing hardware with dynamic algorithms that adjust to the specific data being compressed can also boost adaptability and performance, ensuring a smoother experience across various streaming applications.

To evaluate how data compression impacts latency in your streaming system, the first step is to establish a baseline. Start by measuring the initial latency without any compression. A common approach is to use Round Trip Time (RTT), which calculates the time it takes for data to travel from sender to receiver and back again, including processing time.

Afterward, introduce a data compression method suitable for your streaming data. Once the compression is in place, measure the latency again using the same technique. Compare these measurements to see how compression influences system performance. Be sure to also check the compression ratio - calculated as the original data size divided by the compressed size - to gauge the efficiency of the compression.
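The baseline-then-compare procedure can be scripted. The sketch below measures median RTT over a local `socket.socketpair()` with an echo thread, once with pass-through functions and once with `zlib`, and also reports the compression ratio. The 4-byte length prefix, payload, and round count are assumptions. A local socket pair has almost unlimited bandwidth, so this mainly exercises the harness and the codec cost; run the same measurement across your real network path (or a bandwidth-limited test link) to see the effect that matters.

```python
import socket
import statistics
import threading
import time
import zlib


def recv_exact(sock, n):
    """Read exactly n bytes or raise ConnectionError if the peer closes."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed")
        buf += chunk
    return bytes(buf)


def send_msg(sock, data):
    sock.sendall(len(data).to_bytes(4, "big") + data)  # 4-byte length prefix


def recv_msg(sock):
    return recv_exact(sock, int.from_bytes(recv_exact(sock, 4), "big"))


def echo(sock):
    """Echo every message back until the client disconnects."""
    with sock:
        try:
            while True:
                send_msg(sock, recv_msg(sock))
        except ConnectionError:
            pass


def median_rtt_ms(payload, encode, decode, rounds=50):
    """Median round-trip time, including encode and decode, in milliseconds."""
    client, server = socket.socketpair()
    threading.Thread(target=echo, args=(server,), daemon=True).start()
    samples = []
    with client:
        for _ in range(rounds):
            start = time.perf_counter()
            send_msg(client, encode(payload))
            if decode(recv_msg(client)) != payload:
                raise ValueError("round trip corrupted the payload")
            samples.append((time.perf_counter() - start) * 1000)
    return statistics.median(samples)


def identity(data):
    return data


payload = b'{"event": "tick", "value": 42}\n' * 2_000  # hypothetical message
baseline = median_rtt_ms(payload, identity, identity)
compressed = median_rtt_ms(payload, lambda d: zlib.compress(d, 1), zlib.decompress)
ratio = len(payload) / len(zlib.compress(payload, 1))
print(f"baseline {baseline:.3f} ms, compressed {compressed:.3f} ms, CR {ratio:.1f}x")
```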

It’s important to remember that factors like network delays, buffer sizes, and serialization times can also affect latency. Isolating these variables will give you a clearer picture of how compression specifically impacts your system's performance.
